Video: SpringOne 2GX - Polyglot Persistence for Java Developers

One of the top-rated sessions from SpringOne 2GX 2011 was Chris Richardson's talk, Polyglot Persistence for Java Developers - Moving out of the Relational Comfort Zone. In this talk, Chris provides a great overview of the new non-relational storage options available to Java developers, including Cassandra, Redis and MongoDB. He then covers the practical matters of when and how to incorporate them into your applications. If you haven't yet started experimenting with NoSQL datastores, this presentation is the perfect introduction.

Many thanks to InfoQ for coming to Chicago to record so many of the fantastic SpringOne 2GX presentations.

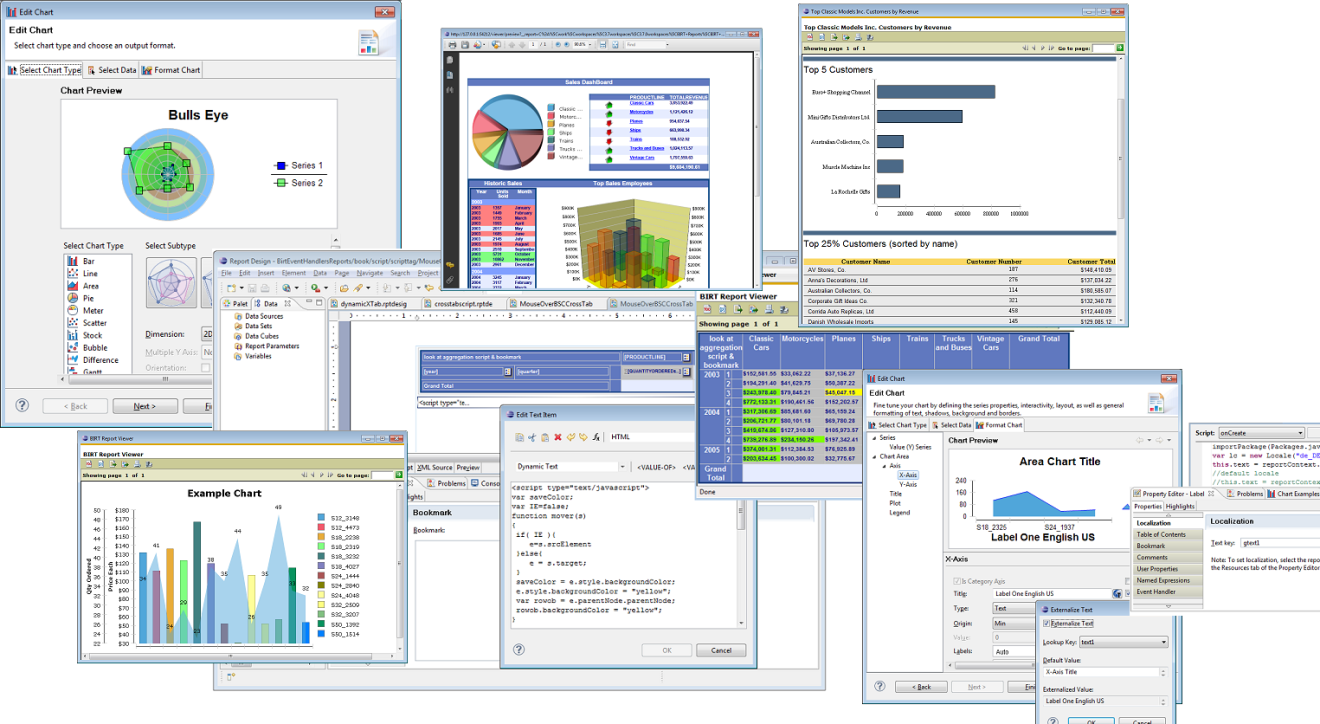

Figure 1 BIRT Collage