Get ahead

VMware offers training and certification to turbo-charge your progress.

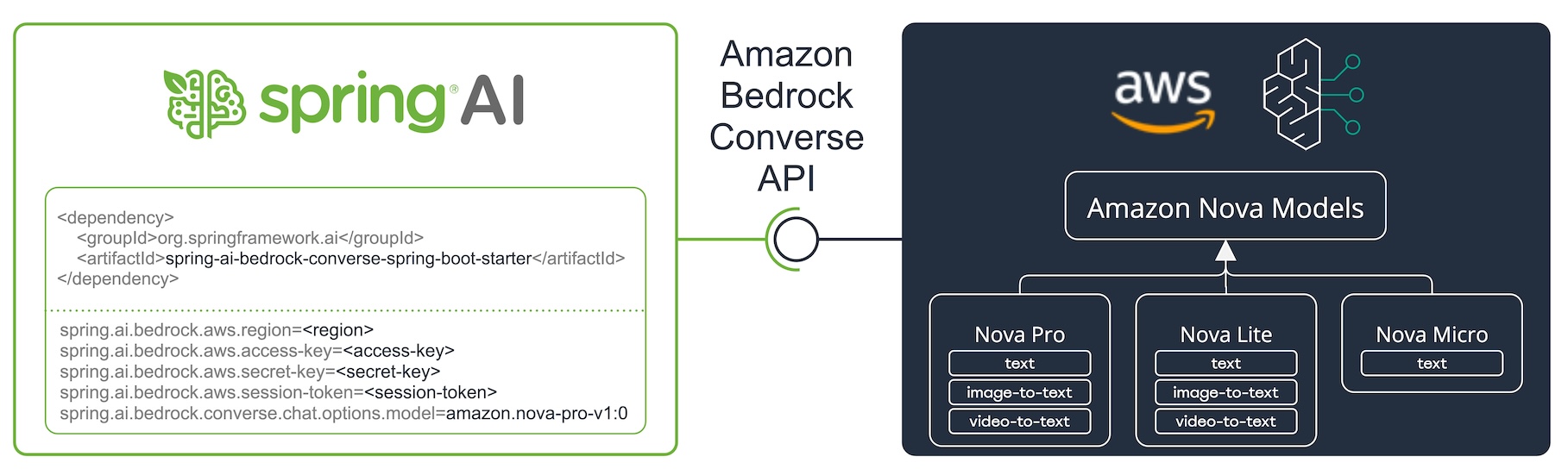

Amazon Nova models, available through Amazon Bedrock, are a new generation of foundation models that support a broad range of use cases, from text and image understanding to video-to-text analysis.

With Spring AI's Bedrock Converse API integration, developers can connect to Nova models and build conversational applications with a single starter dependency and a few configuration properties.

This blog post introduces the key features of Amazon Nova models, demonstrates their integration with Spring AI's Bedrock Converse API, and provides practical examples for text, image, video, document processing, and function calling.

Amazon Nova offers three tiers of models—Nova Pro, Nova Lite, and Nova Micro—to address different performance and cost requirements:

| Specification | Nova Pro | Nova Lite | Nova Micro |
|---|---|---|---|
| Modalities | Text, Image, Video-to-text | Text, Image, Video-to-text | Text |
| Model ID | amazon.nova-pro-v1:0 | amazon.nova-lite-v1:0 | amazon.nova-micro-v1:0 |
| Context window (tokens) | 300K | 300K | 128K |

Nova Pro and Lite support multimodal capabilities, including text, image, and video inputs, while Nova Micro is optimized for text-only interactions at a lower cost.
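To make the table concrete, here is a minimal plain-Java sketch that picks the least expensive Nova tier covering the modalities a request needs. The `NovaModel` and `Modality` enums are hypothetical, and the cheapest-first ordering (Micro, Lite, Pro) is an assumption based on the cost note above; the token figures are the table's rounded values.

```java
import java.util.EnumSet;
import java.util.Optional;
import java.util.Set;

// Input modalities as listed in the comparison table (hypothetical enum).
enum Modality { TEXT, IMAGE, VIDEO }

// Nova tiers, declared cheapest-first (assumed ordering, for illustration only).
enum NovaModel {
    MICRO("amazon.nova-micro-v1:0", 128_000, EnumSet.of(Modality.TEXT)),
    LITE("amazon.nova-lite-v1:0", 300_000, EnumSet.allOf(Modality.class)),
    PRO("amazon.nova-pro-v1:0", 300_000, EnumSet.allOf(Modality.class));

    final String modelId;
    final int contextTokens; // rounded values from the table
    final Set<Modality> modalities;

    NovaModel(String modelId, int contextTokens, Set<Modality> modalities) {
        this.modelId = modelId;
        this.contextTokens = contextTokens;
        this.modalities = modalities;
    }

    // Returns the first (cheapest) tier that supports every required modality.
    static Optional<NovaModel> cheapestFor(Set<Modality> required) {
        for (NovaModel m : values()) {
            if (m.modalities.containsAll(required)) {
                return Optional.of(m);
            }
        }
        return Optional.empty();
    }
}
```

For a text-plus-image prompt, `NovaModel.cheapestFor(EnumSet.of(Modality.TEXT, Modality.IMAGE))` yields `LITE`, whose `modelId` is the value you would put in the `spring.ai.bedrock.converse.chat.options.model` property shown in the configuration below.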

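AWS Configuration: You need an AWS account with access to Amazon Bedrock, access granted to the Nova models in the region you plan to use, and AWS credentials (an access key ID and secret access key, plus a session token if you use temporary credentials).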

Spring AI Dependency: Add the Spring AI Bedrock Converse starter to your Spring Boot project:

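Maven:

```xml
<!-- No version here: it is managed by the Spring AI BOM (org.springframework.ai:spring-ai-bom) -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-bedrock-converse-spring-boot-starter</artifactId>
</dependency>
```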

Gradle:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-bedrock-converse-spring-boot-starter'
}
```

Application Configuration:

Configure application.properties for Amazon Bedrock:

```properties
spring.ai.bedrock.aws.region=us-east-1
spring.ai.bedrock.aws.access-key=${AWS_ACCESS_KEY_ID}
spring.ai.bedrock.aws.secret-key=${AWS_SECRET_ACCESS_KEY}
# Only needed for temporary (session) credentials; omit this line otherwise
spring.ai.bedrock.aws.session-token=${AWS_SESSION_TOKEN}
spring.ai.bedrock.converse.chat.options.model=amazon.nova-pro-v1:0
spring.ai.bedrock.converse.chat.options.temperature=0.8
spring.ai.bedrock.converse.chat.options.max-tokens=1000
```
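The `${...}` placeholders in this configuration are resolved by Spring from the environment at startup. As a simplified illustration only (not Spring's actual placeholder resolver), the sketch below substitutes `${NAME}` tokens from a map and records any that are missing, which can serve as a fail-fast check that your AWS credentials are exported before you run against Bedrock. The `PlaceholderCheck` class and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: a simplified stand-in for ${...} placeholder resolution.
final class PlaceholderCheck {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([A-Za-z0-9_.-]+)\\}");

    // Replaces each ${NAME} with its value from env and collects names that are not set.
    static String resolve(String value, Map<String, String> env, List<String> missing) {
        Matcher m = PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String name = m.group(1);
            String resolved = env.get(name);
            if (resolved == null) {
                missing.add(name);
                resolved = m.group(); // leave the unresolved placeholder as-is
            }
            m.appendReplacement(out, Matcher.quoteReplacement(resolved));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        List<String> missing = new ArrayList<>();
        for (String key : List.of("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")) {
            resolve("${" + key + "}", System.getenv(), missing);
        }
        System.out.println(missing.isEmpty() ? "credentials found" : "missing: " + missing);
    }
}
```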

For more details, refer to the Chat Properties documentation.

Text-based chat completion is straightforward:

```java
String response = ChatClient.create(chatModel)
        .prompt("Tell me a joke about AI.")
        .call()
        .content();
```

Nova Pro and Lite support multimodal inputs, enabling text and visual data processing. Spring AI provides a portable Multimodal API that supports Bedrock Nova models.

Nova Pro and Lite accept multiple images in a single request. These models can analyze images, answer questions about them, classify them, and summarize them based on the instructions provided. Images are sent base64-encoded in image/jpeg, image/png, image/gif, or image/webp format.
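In the `ChatClient` examples you pass a MIME type together with a `Resource`. To make the format constraint concrete, here is a plain-Java sketch that maps a file extension to one of the four supported image MIME types and base64-encodes raw bytes with the JDK encoder. The `ImagePayload` class and its extension table are hypothetical, not part of Spring AI or the Bedrock API.

```java
import java.util.Base64;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper illustrating the image formats Nova Pro/Lite accept.
final class ImagePayload {
    // Extension -> MIME type for the four supported image formats.
    private static final Map<String, String> SUPPORTED = Map.of(
            "jpg", "image/jpeg",
            "jpeg", "image/jpeg",
            "png", "image/png",
            "gif", "image/gif",
            "webp", "image/webp");

    // Returns the MIME type for a file name, or empty if the format is unsupported.
    static Optional<String> mimeTypeFor(String fileName) {
        int dot = fileName.lastIndexOf('.');
        if (dot < 0) {
            return Optional.empty();
        }
        String ext = fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        return Optional.ofNullable(SUPPORTED.get(ext));
    }

    // Base64-encodes raw image bytes (standard alphabet, with padding).
    static String toBase64(byte[] imageBytes) {
        return Base64.getEncoder().encodeToString(imageBytes);
    }
}
```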

Example combining user text with an image:

```java
String response = ChatClient.create(chatModel)
        .prompt()
        .user(u -> u.text("Explain what do you see on this picture?")
                .media(Media.Format.IMAGE_PNG, new ClassPathResource("/test.png")))
        .call()
        .content();
```

This code processes the test.png image with the text message "Explain what do you see on this picture?" and generates a response like:

The image shows a close-up view of a wire fruit basket containing several pieces of fruit...

Amazon Nova Pro and Lite accept a single video per request, provided either as base64-encoded bytes or as an Amazon S3 URI.

Supported video formats include video/x-matroska, video/quicktime, video/mp4, video/webm, video/x-flv, video/mpeg, video/x-ms-wmv, and video/3gpp.
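Because a request carries at most one video, supplied either inline or by S3 reference, a client may want to validate the source before sending it. The plain-Java sketch below treats `s3://` locations as S3 references and otherwise checks the file extension against the video formats listed above. The `VideoSource` type, its records, and the extension-to-MIME mapping are hypothetical assumptions for illustration.

```java
import java.net.URI;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical model of the two ways a video can be supplied.
interface VideoSource {
    record S3Reference(URI uri) implements VideoSource {}
    record InlineBytes(String mimeType) implements VideoSource {}

    // Extension -> MIME type for the supported video formats (assumed extensions).
    Map<String, String> FORMATS = Map.of(
            "mkv", "video/x-matroska", "mov", "video/quicktime", "mp4", "video/mp4",
            "webm", "video/webm", "flv", "video/x-flv", "mpeg", "video/mpeg",
            "mpg", "video/mpeg", "wmv", "video/x-ms-wmv", "3gp", "video/3gpp");

    // Classifies a location; throws if it is neither an S3 URI nor a supported file.
    static VideoSource of(String location) {
        if (location.startsWith("s3://")) {
            return new S3Reference(URI.create(location));
        }
        int dot = location.lastIndexOf('.');
        String ext = dot < 0 ? "" : location.substring(dot + 1).toLowerCase(Locale.ROOT);
        String mime = FORMATS.get(ext);
        if (mime == null) {
            throw new IllegalArgumentException("Unsupported video format: " + location);
        }
        return new InlineBytes(mime);
    }

    // Enforces the one-video-per-request rule.
    static void requireAtMostOne(List<VideoSource> videos) {
        if (videos.size() > 1) {
            throw new IllegalArgumentException("Only one video per request is supported");
        }
    }
}
```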

Example combining user text with a video:

```java
String response = ChatClient.create(chatModel)
        .prompt()
        .user(u -> u.text("Explain what do you see in this video?")
                .media(Media.Format.VIDEO_MP4, new ClassPathResource("/test.video.mp4")))
        .call()
        .content();
```

This code processes the test.video.mp4 video with the text message "Explain what do you see in this video?" and generates a response like:

The video shows a group of baby chickens, also known as chicks, huddled together on a surface ...

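Nova Pro and Lite support document input in two variants: text documents (such as txt, csv, html, or md), where the emphasis is on understanding the textual content, and media documents (such as pdf, docx, or xlsx), where the emphasis is on vision-based understanding, for example answering questions about charts and graphs.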

Example combining user text with a media document:

```java
String response = ChatClient.create(chatModel)
        .prompt()
        .user(u -> u.text(
                "You are a very professional document summarization specialist. Please summarize the given document.")
                .media(Media.Format.DOC_PDF, new ClassPathResource("/spring-ai-reference-overview.pdf")))
        .call()
        .content();
```

This code processes the spring-ai-reference-overview.pdf document with the text message and generates a response like:

Introduction:

- Spring AI is designed to simplify the development of applications with artificial intelligence (AI) capabilities, aiming to avoid unnecessary complexity....
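One way to route files between the two document variants is sketched below in plain Java. It assumes the split between text documents (txt, csv, html, md) and media documents (pdf, docx, xlsx); the `DocumentKind` enum and its extension lists are hypothetical, so treat the Amazon Nova documentation as the authoritative list of supported formats.

```java
import java.util.Locale;
import java.util.Optional;
import java.util.Set;

// Hypothetical classification of document inputs into the two variants.
enum DocumentKind {
    TEXT(Set.of("txt", "csv", "html", "md")),  // emphasis on textual content
    MEDIA(Set.of("pdf", "docx", "xlsx"));      // emphasis on vision-based understanding

    private final Set<String> extensions;

    DocumentKind(Set<String> extensions) {
        this.extensions = extensions;
    }

    // Returns the variant for a file name, or empty if the extension is not recognized.
    static Optional<DocumentKind> of(String fileName) {
        int dot = fileName.lastIndexOf('.');
        if (dot < 0) {
            return Optional.empty();
        }
        String ext = fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        for (DocumentKind kind : values()) {
            if (kind.extensions.contains(ext)) {
                return Optional.of(kind);
            }
        }
        return Optional.empty();
    }
}
```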

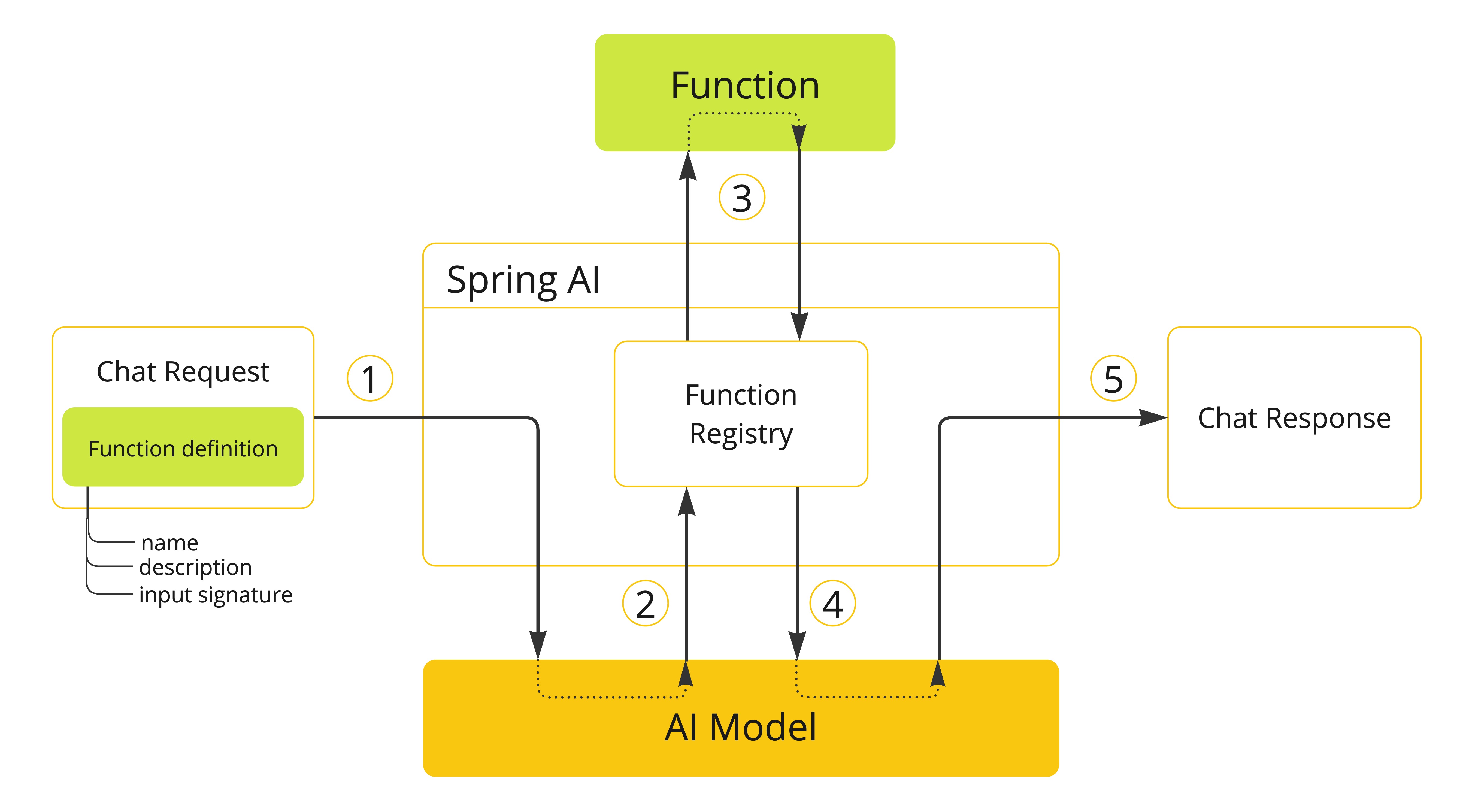

Nova models support Tool/Function Calling for integration with external tools.

```java
@Bean
@Description("Get the weather in a location. Return temperature in Celsius or Fahrenheit.")
public Function<WeatherRequest, WeatherResponse> weatherFunction() {
    return new MockWeatherService();
}
```
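The bean refers to `WeatherRequest`, `WeatherResponse`, and `MockWeatherService` without defining them. A minimal plain-Java sketch of what they might look like follows; the record fields, the `Unit` enum, and the canned 30 °C reading are hypothetical, chosen only so the mock returns a deterministic value.

```java
import java.util.function.Function;

// Hypothetical temperature unit; the model picks one based on the user's question.
enum Unit { C, F }

// Hypothetical input type: the model fills these fields when it calls the tool.
record WeatherRequest(String location, Unit unit) {}

// Hypothetical output type returned to the model as the tool result.
record WeatherResponse(double temp, Unit unit) {}

// Mock implementation: returns a fixed temperature instead of calling a weather API.
class MockWeatherService implements Function<WeatherRequest, WeatherResponse> {
    private static final double BASE_CELSIUS = 30.0; // canned value for illustration

    @Override
    public WeatherResponse apply(WeatherRequest request) {
        // Default to Celsius when the model does not specify a unit.
        Unit unit = request.unit() == null ? Unit.C : request.unit();
        double temp = unit == Unit.C ? BASE_CELSIUS : BASE_CELSIUS * 9 / 5 + 32;
        return new WeatherResponse(temp, unit);
    }
}
```

Keeping the service behind `java.util.function.Function` means a real implementation can later replace the mock without changing the bean signature.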

```java
String response = ChatClient.create(this.chatModel)
        .prompt("What's the weather like in Boston?")
        // Refer to the function by its bean name; the input type is taken from the bean
        .functions("weatherFunction")
        .call()
        .content();
```
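In this exchange the model never runs Java code itself: it replies with a tool-use request naming `weatherFunction` and its arguments, the framework invokes the matching function, and the result is sent back so the model can compose the final answer. The plain-Java sketch below simulates only the dispatch step with a name-to-function registry. The `ToolCall` record and `ToolRegistry` class are hypothetical and do not mirror Spring AI's internal classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical tool-use request as a model might emit it: a tool name plus arguments.
record ToolCall(String name, Map<String, String> arguments) {}

// Hypothetical registry mapping tool names to functions over string arguments.
final class ToolRegistry {
    private final Map<String, Function<Map<String, String>, String>> tools = new HashMap<>();

    void register(String name, Function<Map<String, String>, String> tool) {
        tools.put(name, tool);
    }

    // Looks up the requested tool and runs it; the returned string would be
    // sent back to the model as the tool result.
    String dispatch(ToolCall call) {
        Function<Map<String, String>, String> tool = tools.get(call.name());
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + call.name());
        }
        return tool.apply(call.arguments());
    }
}
```

For example, after `registry.register("weatherFunction", args -> "30.0 C in " + args.get("location"))`, dispatching `new ToolCall("weatherFunction", Map.of("location", "Boston"))` returns `"30.0 C in Boston"`.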

VMware Tanzu Platform 10 integrates Amazon Bedrock Nova models through the VMware Tanzu AI Server, powered by Spring AI. This integration provides:

For more information about deploying AI applications with Tanzu AI Server, visit the VMware Tanzu AI documentation.

Integrating Spring AI with Amazon Bedrock Nova models through the Converse API gives you one ChatClient programming model for building conversational applications. Nova Pro and Lite handle multimodal input across text, images, videos, and documents, and function calling lets the model interact with external tools and services.

Start building advanced AI applications with Nova models and Spring AI today!