If you want to understand the strategic implications of VMware’s recently announced acquisition of SpringSource, there are several good sources, including Steve Herrod’s (CTO of VMware) blog post, Rod Johnson’s commentary, Paul Maritz’s press and analyst call, and Darryl Taft’s insightful piece in eWeek.

In this post I will focus more on what this all means at a technical level, to give you an idea of the kinds of capabilities you can look forward to.

Firstly, let me reiterate that nothing changes with respect to our open source projects and SpringSource product offerings. Nothing changes, that is, except that we’ll have even more opportunity in the future to add exciting new features to them. Spring 3.0 is coming soon, and we have just released milestone 4. dm Server is making rapid progress towards a 2.0 release, and we have some very cool stuff up our sleeves for a forthcoming release of tc Server. The Eclipse tool support for Groovy is generating a great deal of interest, Grails is pushing on towards a 1.2 release, and exciting things are happening across our Spring projects. All of this will continue apace.

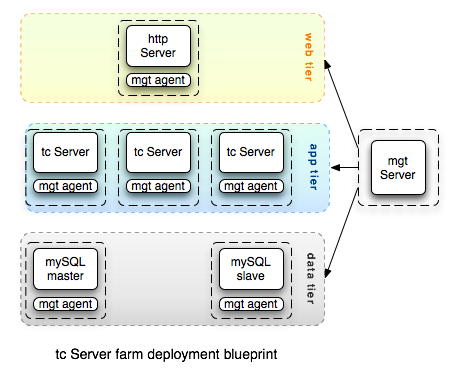

What happens when a Spring-powered application is deployed in a production setting? In a typical scenario, multiple components work in concert and need to be configured and connected: for example, an HTTP server load balancing across a set of tc Server instances, which in turn talk to a database configured in a master/slave setup. These (middleware) components form the logical tiers of an application (using the term application “in the large” now). The logical tiers are mapped to physical tiers in an actual deployment (e.g. you can deploy a database and app server on the same machine, or on different machines). When this terminology was first invented, physical tiers really were physical. Nowadays, of course, your physical tier may be virtual, and those virtual machines are in turn mapped onto physical resources... still with me?
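To make the two levels of mapping concrete, here is a minimal Java sketch: logical tiers are placed on virtual machines, and virtual machines are placed on physical hosts. The `TierMapping` class and all tier, VM and host names are hypothetical, chosen only for illustration; following both mappings shows which physical hosts a logical tier really ends up on.

```java
import java.util.*;

// Hypothetical model of the mapping described above:
// logical tier -> virtual machine(s) -> physical host.
class TierMapping {
    // Which VMs each logical tier is deployed onto.
    private final Map<String, List<String>> tierToVms = new LinkedHashMap<>();
    // Which physical host each VM is currently running on.
    private final Map<String, String> vmToHost = new HashMap<>();

    void placeTier(String tier, String... vms) {
        tierToVms.computeIfAbsent(tier, t -> new ArrayList<>()).addAll(Arrays.asList(vms));
    }

    void placeVm(String vm, String host) {
        vmToHost.put(vm, host);
    }

    // Follow both mappings to find the physical hosts a logical tier runs on.
    Set<String> hostsFor(String tier) {
        Set<String> hosts = new TreeSet<>();
        for (String vm : tierToVms.getOrDefault(tier, List.of())) {
            String host = vmToHost.get(vm);
            if (host != null) {
                hosts.add(host);
            }
        }
        return hosts;
    }

    public static void main(String[] args) {
        TierMapping m = new TierMapping();
        m.placeTier("web", "vm-httpd");
        m.placeTier("app", "vm-tc1", "vm-tc2");
        m.placeTier("db", "vm-db-master", "vm-db-slave");
        // Once virtualized, different logical tiers may share a physical host.
        m.placeVm("vm-httpd", "esx-01");
        m.placeVm("vm-tc1", "esx-01");
        m.placeVm("vm-tc2", "esx-02");
        m.placeVm("vm-db-master", "esx-02");
        m.placeVm("vm-db-slave", "esx-03");
        System.out.println(m.hostsFor("app")); // [esx-01, esx-02]
    }
}
```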

Just as we have an application blueprint that describes the components of a Spring-powered application and how they fit together, a deployment blueprint can describe the components of a given deployment scenario: what components there are, how they are connected and configured, and how cross-cutting concerns such as security and (anti-)affinity should be handled. As a starting point, some common deployment patterns (such as the tc Server farm example I gave earlier) can be captured in a catalog. In time, you can imagine an operations team extending that catalog with their own custom blueprints for application deployment.
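A deployment blueprint is essentially structured metadata. The Java sketch below shows one hypothetical way to capture the tc Server farm example: components with their configuration, the links between them, and an anti-affinity rule saying that components in the same role must not share a host. The `Component`, `Link` and `Blueprint` types and the validation logic are assumptions for illustration, not a SpringSource or VMware format.

```java
import java.util.*;

// Hypothetical building blocks of a deployment blueprint.
record Component(String name, String role, Map<String, String> config) {}

record Link(String from, String to) {}

record Blueprint(String name, List<Component> components, List<Link> links,
                 Set<String> antiAffinityRoles) {

    // Check a proposed placement (component name -> host) against the
    // anti-affinity rules: two components sharing an anti-affine role
    // must not land on the same host. Unplaced components are ignored.
    List<String> violations(Map<String, String> placement) {
        List<String> problems = new ArrayList<>();
        Map<String, String> firstOnHost = new HashMap<>(); // "role@host" -> component
        for (Component c : components) {
            String host = placement.get(c.name());
            if (host == null || !antiAffinityRoles.contains(c.role())) {
                continue;
            }
            String other = firstOnHost.putIfAbsent(c.role() + "@" + host, c.name());
            if (other != null) {
                problems.add(other + " and " + c.name() + " share host " + host);
            }
        }
        return problems;
    }
}

class BlueprintDemo {
    public static void main(String[] args) {
        // The tc Server farm example: one load balancer, two tc Server instances.
        Blueprint farm = new Blueprint("tc-server-farm",
            List.of(new Component("httpd", "load-balancer", Map.of("port", "80")),
                    new Component("tc1", "app-server", Map.of("port", "8080")),
                    new Component("tc2", "app-server", Map.of("port", "8080"))),
            List.of(new Link("httpd", "tc1"), new Link("httpd", "tc2")),
            Set.of("app-server"));
        // Putting both tc Server instances on one host violates anti-affinity.
        System.out.println(farm.violations(Map.of("httpd", "h1", "tc1", "h2", "tc2", "h2")));
        // prints [tc1 and tc2 share host h2]
    }
}
```

Keeping affinity rules in the blueprint, rather than in each operator's head, is what later lets the infrastructure act on them automatically.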

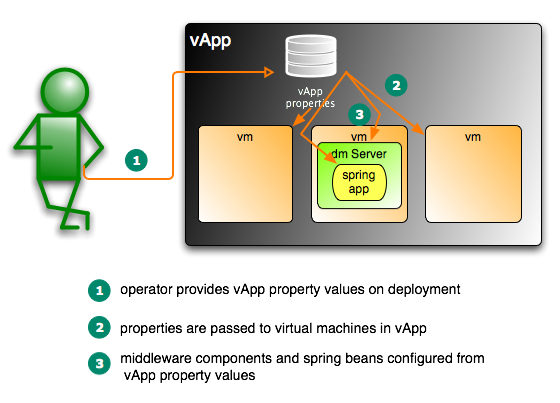

VMware vSphere includes support for a concept known as a vApp. A vApp is “a logical entity comprising one or more virtual machines, which uses the industry standard Open Virtualization Format to specify and encapsulate all components of a multi-tier application, as well as the operational policies and service levels associated with it.”

vApps are the perfect packaging unit for deployment blueprints. The same vApp can be supported in your data center and on a public vCloud. A vApp can also expose configuration properties: an operator provides values for these properties when deploying the vApp.
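The property mechanism can be pictured as a contract between the blueprint author and the operator: the vApp declares which properties exist (and optionally their defaults), and the operator supplies values at deployment time. The sketch below models that contract in plain Java. `VAppProperty`, `VAppDeployment` and `resolve` are hypothetical names; real vApp properties are declared in the vApp's OVF descriptor, not in Java code.

```java
import java.util.*;

// Hypothetical declaration of a property a vApp exposes to its operator.
record VAppProperty(String key, String defaultValue, boolean required) {}

class VAppDeployment {
    // Merge operator-supplied values with declared defaults, rejecting
    // unknown keys and missing required values.
    static Map<String, String> resolve(List<VAppProperty> declared,
                                       Map<String, String> operatorValues) {
        Map<String, String> result = new TreeMap<>();
        Set<String> known = new HashSet<>();
        for (VAppProperty p : declared) {
            known.add(p.key());
            String value = operatorValues.getOrDefault(p.key(), p.defaultValue());
            if (value == null) {
                if (p.required()) {
                    throw new IllegalArgumentException("missing required property: " + p.key());
                }
                continue;
            }
            result.put(p.key(), value);
        }
        for (String key : operatorValues.keySet()) {
            if (!known.contains(key)) {
                throw new IllegalArgumentException("unknown property: " + key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<VAppProperty> declared = List.of(
            new VAppProperty("http.port", "8080", false),
            new VAppProperty("db.url", null, true));
        // The operator overrides the port and supplies the database URL.
        System.out.println(resolve(declared,
            Map.of("http.port", "9090", "db.url", "jdbc:mysql://db.example.com/app")));
        // prints {db.url=jdbc:mysql://db.example.com/app, http.port=9090}
    }
}
```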

Beginning with dm Server (watch out for more details in the forthcoming 2.0.0.M5 release), we’re making it possible for our middleware to be configured via vApp properties. This enables an operator to override ports and other configuration settings when deploying a vApp without needing to know anything about the virtual machines, or the configuration of the middleware components, inside it. This capability extends beyond the middleware components too: you’ll also be able to configure application properties (dependency injected by Spring) from vApp properties specified by an operator at deployment time.
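In Spring, this kind of injection is commonly expressed with `${...}` placeholders in bean definitions, resolved by Spring's placeholder support. The plain-Java sketch below imitates only that resolution step, without depending on Spring, and assumes the operator's vApp property values have already been read into a `Map`; how dm Server actually surfaces vApp properties to an application is not described here. `PlaceholderResolver`, `DataSourceSettings` and the `${key:default}` fallback form are hypothetical choices for this sketch.

```java
import java.util.*;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for ${...} placeholder resolution, backed by values
// an operator supplied as vApp properties at deployment time.
class PlaceholderResolver {
    // Matches ${key} or ${key:default}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}:]+)(?::([^}]*))?\\}");
    private final Map<String, String> vAppProperties;

    PlaceholderResolver(Map<String, String> vAppProperties) {
        this.vAppProperties = vAppProperties;
    }

    String resolve(String template) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = vAppProperties.get(m.group(1));
            if (value == null) {
                value = m.group(2); // fall back to the inline default, if any
            }
            if (value == null) {
                throw new IllegalStateException("unresolved placeholder: " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}

// Hypothetical bean whose settings come from vApp properties.
record DataSourceSettings(String url, int maxConnections) {}

class InjectionDemo {
    public static void main(String[] args) {
        PlaceholderResolver r = new PlaceholderResolver(
            Map.of("db.url", "jdbc:postgresql://10.0.0.5/orders"));
        DataSourceSettings ds = new DataSourceSettings(
            r.resolve("${db.url}"),
            Integer.parseInt(r.resolve("${db.maxConnections:20}")));
        System.out.println(ds);
        // prints DataSourceSettings[url=jdbc:postgresql://10.0.0.5/orders, maxConnections=20]
    }
}
```

The point of the design is that the application code only sees ordinary configuration values; where they came from (a properties file in development, vApp properties in production) stays out of the bean.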

There are many interesting ways in which these capabilities may be combined, but let me pick on two that I think are illustrative of the potential here: the Platform as a Service (PaaS) model; and the application appliance model.

In the Platform as a Service model, your data center, or any of the vendors signed up as vCloud service providers, makes available a catalog of deployment blueprints to choose from. Each of these can be thought of as a platform (in the PaaS sense) to which you can deploy your application. You select the platform you want to deploy to, the corresponding vApp is provisioned on your behalf (perhaps with a web front end that lets you specify any vApp properties exposed by the blueprint), and then you upload your application artifact(s) to your provisioned, running platform instance. For applications built with Grails or Roo, where we understand even more about the structure of your application, blueprint selection and artifact uploading can be made available right from the Grails (or Roo) command line via a plugin. Think of the hosting opportunities this model will open up for such applications!
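The PaaS workflow has three steps: choose a blueprint from a catalog, provision it with any properties it exposes, then upload artifacts to the running instance. The in-memory Java sketch below walks through those steps. `PlatformCatalog`, `PlatformInstance` and their methods are hypothetical and do not correspond to any vCloud, Grails or Roo API; a real `upload` would transfer the artifact to the provisioned platform rather than record its name.

```java
import java.util.*;

// Hypothetical catalog of deployment blueprints offered as platforms.
class PlatformCatalog {
    private final Map<String, Set<String>> blueprints = new TreeMap<>(); // name -> exposed property keys
    private int nextId = 1;

    void publish(String blueprint, Set<String> exposedProperties) {
        blueprints.put(blueprint, exposedProperties);
    }

    Set<String> list() {
        return blueprints.keySet();
    }

    // Provision a new platform instance, accepting only exposed properties.
    PlatformInstance provision(String blueprint, Map<String, String> properties) {
        Set<String> exposed = blueprints.get(blueprint);
        if (exposed == null) {
            throw new IllegalArgumentException("no such blueprint: " + blueprint);
        }
        if (!exposed.containsAll(properties.keySet())) {
            throw new IllegalArgumentException("blueprint does not expose all of " + properties.keySet());
        }
        return new PlatformInstance(blueprint + "-" + nextId++, properties);
    }
}

class PlatformInstance {
    final String id;
    final Map<String, String> properties;
    final List<String> deployedArtifacts = new ArrayList<>();

    PlatformInstance(String id, Map<String, String> properties) {
        this.id = id;
        this.properties = Map.copyOf(properties);
    }

    // Placeholder for transferring an artifact to the running platform.
    void upload(String artifact) {
        deployedArtifacts.add(artifact);
    }

    public static void main(String[] args) {
        PlatformCatalog catalog = new PlatformCatalog();
        catalog.publish("tc-server-farm", Set.of("http.port", "cluster.size"));
        PlatformInstance p = catalog.provision("tc-server-farm", Map.of("http.port", "80"));
        p.upload("petclinic.war");
        System.out.println(p.id + " " + p.deployedArtifacts);
        // prints tc-server-farm-1 [petclinic.war]
    }
}
```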

In the application appliance model, the development or operations team picks a starting deployment blueprint, creates an instance of the corresponding vApp, and installs the application artifacts into that running system. So far this looks just like the PaaS model. What happens next is different, though. The virtual machines (with the application artifacts now installed) are packaged as a new vApp, and any application-specific properties that may change on each deployment (for example, the database URL and password if the vApp relies on an external database) are configured as vApp properties. Now the entire application, and everything needed to run it, is packaged as a vApp (an application appliance) that can be provisioned as a unit and version controlled. Putting an application into production then becomes as simple as deploying the vApp: nothing to go wrong, because everything is pre-packaged and tested.
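What distinguishes an appliance is that it is frozen: a published version never changes, and only the properties that legitimately vary per deployment are left open. The Java sketch below captures those two rules with an immutable record. `Appliance`, `deploy`, `withVersion` and the image names are hypothetical; the sketch models the idea, not how vSphere stores or versions a vApp.

```java
import java.util.*;

// Hypothetical "application appliance": VMs with the application already
// installed, frozen under a version, exposing only per-deployment properties.
record Appliance(String name, String version, List<String> vmImages,
                 Set<String> perDeploymentProperties) {

    Appliance {
        // Defensive copies so a published appliance version cannot change.
        vmImages = List.copyOf(vmImages);
        perDeploymentProperties = Set.copyOf(perDeploymentProperties);
    }

    // Deploying requires exactly the declared properties, no more and no less.
    String deploy(Map<String, String> values) {
        if (!values.keySet().equals(perDeploymentProperties)) {
            throw new IllegalArgumentException("expected properties " + new TreeSet<>(perDeploymentProperties));
        }
        return name + ":" + version + " deployed with " + new TreeSet<>(values.keySet());
    }

    // A new application release yields a new appliance version
    // instead of modifying an existing one in place.
    Appliance withVersion(String newVersion, List<String> newImages) {
        return new Appliance(name, newVersion, newImages, perDeploymentProperties);
    }
}

class ApplianceDemo {
    public static void main(String[] args) {
        Appliance v1 = new Appliance("orders", "1.0",
            List.of("web-vm.vmdk", "app-vm.vmdk"), Set.of("db.url", "db.password"));
        System.out.println(v1.deploy(Map.of("db.url", "jdbc:mysql://prod-db/orders", "db.password", "secret")));
        // prints orders:1.0 deployed with [db.password, db.url]
    }
}
```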

Without the knowledge that Spring brings to the table, a vApp is just a collection of virtual machines that vSphere can provision on the physical resources available to it. But things get a lot more interesting when that provisioning is done with knowledge of the application blueprint and the deployment blueprint. Now we suddenly have some understanding of the application and middleware components and how they are connected, and we can optimize the virtual infrastructure to support that. For example:

On the subject of scaling up (or down), the scale points are just another piece of metadata in the deployment blueprint: “1..n” (or “3..8”, or whatever you decide) servers playing a given role. Having specified that, you can leave Hyperic HQ and vCenter working in tandem to manage and optimize the number of servers for you (even to the extent of powering off physical machines that temporarily aren’t needed, to save on energy costs), all based on the application SLAs and virtual infrastructure SLAs that you specify.
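The scale-point metadata itself is simple; what matters is that every scaling decision respects it. The hypothetical Java sketch below parses a range such as `3..8` (treating `n` as "no fixed upper limit", an assumption of this sketch) and computes a desired server count from observed load, clamped to that range. The load-per-server target stands in for an application SLA; this is not how Hyperic HQ or vCenter actually compute scaling decisions.

```java
// Hypothetical scale-point metadata such as "1..n" or "3..8", plus a simple
// load-based policy that never leaves the declared bounds.
record ScaleRange(int min, int max) {

    // Parse "min..max"; an upper bound of "n" means no fixed limit.
    static ScaleRange parse(String spec) {
        String[] parts = spec.split("\\.\\.");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected min..max, got " + spec);
        }
        int min = Integer.parseInt(parts[0].trim());
        String upper = parts[1].trim();
        int max = upper.equals("n") ? Integer.MAX_VALUE : Integer.parseInt(upper);
        if (min < 0 || max < min) {
            throw new IllegalArgumentException("invalid range: " + spec);
        }
        return new ScaleRange(min, max);
    }

    // Servers needed so each handles at most targetPerServer units of load
    // (e.g. requests per second derived from an SLA), clamped to the range.
    int desiredServers(double currentLoad, double targetPerServer) {
        if (targetPerServer <= 0) {
            throw new IllegalArgumentException("targetPerServer must be positive");
        }
        long needed = (long) Math.ceil(currentLoad / targetPerServer);
        return (int) Math.max(min, Math.min(max, needed));
    }

    public static void main(String[] args) {
        ScaleRange r = ScaleRange.parse("3..8");
        System.out.println(r.desiredServers(1000, 250)); // 4
        System.out.println(r.desiredServers(100, 250));  // 3: never below the minimum
        System.out.println(r.desiredServers(5000, 250)); // 8: never above the maximum
    }
}
```

Clamping rather than rejecting out-of-range demand keeps the blueprint's bounds authoritative: the policy can only choose a count the blueprint already allows.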